Stuffed Chicken Breast - Nutanix Select Session

Okay, so I know what you’re thinking!

“Uhhh, ExploreVM, what’s with the recipe post?”

And that’s a fair thought. It’s certainly outside of my normal content. The reason for this entry is that I am presenting a session on BBQ for the Nutanix Select program. It seems my Blocks and Bites reputation precedes me. If you’re attending the event, you will find the recipe below! If you’re not, no worries. I will be posting a video grilling this dish on the Blocks and Bites page in the near future!

Nashville Hot Stuffed Chicken Breast

Ingredients

4 Large Chicken Breasts

Pickle Slices (Pickles Cut the Long Way)

4 Thick Slices of Pepper Jack Cheese (Other cheese may be substituted)

One Large Red Onion

Pickle Brine (Purchased independently or used from the pickle slices)

4 Tbsp Unsalted Butter

2 Tbsp Brown Sugar

2 Tbsp Minced Garlic

1 Tbsp Paprika

Cayenne Pepper (1 Tbsp or to taste)

1 Tsp Coarse Ground Black Pepper

3 Tbsp White Vinegar

Water

Toothpicks, Butcher’s Twine, or Skewers

Utensils

Cutting Board

Butcher Knife

Medium Size Pot

Silicone Spatula/Whisk

Large Bowl

Small Bowls

Brine the Chicken

Wash and butterfly the chicken breasts. DO NOT cut all the way through; each breast should open like a book when you are done cutting.

Place the chicken breasts in the large bowl

Pour the Pickle Brine over the chicken in the bowl

Add 1 cup water, pinch of salt, pinch of black pepper, and an optional Bay leaf

Let brine for 2 hours refrigerated

Prepare the Nashville Hot Sauce

WASH THE CUTTING BOARD THOROUGHLY

Slice the red onion into long slices

Place the onion slices into a small bowl and set off to the side

Place the pot on the stove over medium heat

Slice the 4 Tbsp of butter into tablespoon-size pieces, place them in the pot, and let them begin to melt

Add the Brown Sugar and White Vinegar, stirring into the butter

Add the Garlic, Paprika, Black Pepper, and Cayenne Pepper; continue to stir until the sauce is simmering

Once the sauce is thoroughly mixed, remove from heat

Prepare the Chicken

After the 2 hours, remove the chicken from the brine and place on the cutting board

Open the chicken and place one slice of Pepper Jack cheese, one pickle slice, and a few of the sliced onions

Close the chicken, and use toothpicks, butcher’s twine, or skewers to keep it closed

These will be flipped on the grill numerous times, so you’ll want to be sure they are closed

Optional - Apply your favorite chicken seasoning or BBQ rub to the outside of the chicken

If you’re using a gas grill, preheat it to 400 degrees Fahrenheit. If you’re using charcoal, place a large pile in the center of the kettle and let the coals burn until they turn white.

Place the chicken over direct heat, flipping every few minutes.

Cook until the internal temperature reaches 165 degrees Fahrenheit; typically around 20-25 minutes

In the last 5 minutes of cooking, spread the Nashville Hot Sauce over the chicken, flip, and cover the opposite side. Let it cook for a couple more minutes.

Let the chicken rest before serving. Apply additional sauce if desired.

Blocks and Bites Episode 3: Seafood and Tanzu

In this episode of the Blocks and Bites Show, Scottie Ray (Principal Field SE - Modern App Platform) and I discuss VMware Tanzu and grill seafood!

Because 2020 is just the year that keeps on giving, you'll notice my video feed has a black box around it. Unfortunately that was a setting that ended up being left over from a previous video project that didn't clear when I finished the project.

If you're interested in some of the show stickers, reach out to me on the platforms below and I can arrange to ship some out!

Would you like to be a guest on the Blocks and Bites show to talk tech and food? Reach out to Paul Woodward (ExploreVM) on Twitter, LinkedIn, or Facebook.

Link-O-Rama:

Show Twitter: https://twitter.com/BlocksAndBites

Show Website: www.blocksandbites.com

Host Twitter: https://twitter.com/ExploreVM

Host Website: https://explorevm.com/

Host Facebook: https://www.facebook.com/ExploreVM

Guest Twitter: https://twitter.com/H20nly

Guest Website: https://crescentcove.networkforgood.com/projects/92582-scottie-ray-s-fundraiser

Music By: https://twitter.com/anthonyrhook

Blocks and Bites Episode 2: Grilling Tech

In this episode of the Blocks and Bites Show Scott Smallie (Data Center Field Systems Engineer) and I discuss the technology used in grilling, as well as the journey to cloud.

I received a lot of positive feedback on the first episode and will be implementing some changes based on it. This episode was already recorded before the first one was released, so you'll notice some big changes starting with episode 3.

I also want to shout out to Anthony Hook for the theme music for the show!

Would you like to be a guest on the Blocks and Bites show to talk tech and food? Reach out to Paul Woodward (ExploreVM) on Twitter, LinkedIn, or Facebook.

Link-O-Rama:

Show Twitter: https://twitter.com/BlocksAndBites

Show Website: www.blocksandbites.com

Host Twitter: https://twitter.com/ExploreVM

Host Website: https://explorevm.com/

Host Facebook: https://www.facebook.com/ExploreVM

Guest Twitter: https://twitter.com/ScottSmallie

Guest Website: http://www.craftedpixelstudios.com/

Music By: https://twitter.com/anthonyrhook

Watch Blocks and Bites Episode 1!

Check out the first episode of the Blocks and Bites Tech Grilling show!

The day has finally arrived! The first episode of the Blocks and Bites show has been released!

In this show, we cook up chicken wings with our buddy Tim Davis (@vTimD on Twitter) and talk about the impact of Covid-19 on the workforce and cloud adoption.

As this is the first episode, there were some learning experiences thrown at us. Unfortunately, the overhead grill camera had some technical issues and the video was not up to par to be included in the episode. I believe this to be due to a poor quality cable and should be resolved by the next episode.

Would you like to be a guest on the Blocks and Bites show to talk tech and food? Reach out to Paul Woodward (ExploreVM) on Twitter, LinkedIn, or Facebook.

Link-O-Rama:

Show Twitter: https://twitter.com/BlocksAndBites

Host Twitter: https://twitter.com/ExploreVM

Host Facebook: https://www.facebook.com/ExploreVM

Guest Twitter: https://twitter.com/vtimd

Guest Website: https://apperati.io/

Join ExploreVM on July 15-17 for Cloud Field Day 8!

Once again, I have been fortunate enough to be asked to join Tech Field Day as a delegate. In the past I’ve attended Storage Field Day, Tech Field Day, and TFDx at VMworld. This go ‘round it will be Cloud Field Day! Historically, these events have taken place live and in person. However, due to the ongoing pandemic, Cloud Field Day 8 has gone completely virtual. While we will miss out on the daily rush of traveling from location to location and the camaraderie of decompressing at the end of the day at the hotel bar, the virtual aspect has opened up CFD8 to over 20 delegates!

Given the size of the delegate panel, we have been broken into two groups. The “early” group will see sessions from Aruba, Diamanti, Infrascale, Morpheus Data, Veeam, and Zerto. The “late” crew will have presentations from Fortinet, HashiCorp, Igneous, NetApp, and Qumulo. I am participating in the Late crew, and may sneak into some early sessions as well.

All sessions will be streamed on the Tech Field Day website and their Facebook page. Outside of the stream, you can follow along on Twitter by using #CFD8. Check back here for posts around each presentation, as well as my fellow delegates’ blogs.

What questions do you have for the presenting companies? Please reach out on Twitter, Facebook, or email and I’d be happy to ask on your behalf!

VMware vSphere Bitfusion is Here! (Almost)

Bitfusion’s integration with vSphere will launch in Q2 FY2021

As Machine Learning and Artificial Intelligence continue their growth into the modern datacenter, so too must the modern datacenter evolve. In July of 2019 VMware, a leading provider of virtualization and cloud infrastructure, announced its acquisition of Bitfusion to bolster its ability to meet end users’ needs. Today, VMware announced that Bitfusion’s integration into vSphere will be available by the end of Q2 FY2021 (end of July 2020).

Bitfusion’s abstraction of the GPU (Graphics Processing Unit) layer lets administrators pool GPU resources the same way VMware pools compute, memory, and storage resources. This allows for more efficient GPU usage, limiting over- and under-subscription of AI/ML servers. With GPU pools, applications can be assigned the proper amount of GPU necessary to run each workload at its peak performance. VMware’s hardware acceleration of the GPU supplies resources faster and more efficiently than Bitfusion on its own.

The first iteration of vSphere Bitfusion focuses on applications around computational math and ML. TensorFlow and PyTorch are called out as some of the best workflow candidates. The initial version is not designed for VDI or any sort of real-time applications. Bitfusion works by intercepting CUDA calls via the CUDA API and sending them across the network to be processed on the GPU. At launch, the only GPU vendor supported by vSphere Bitfusion is NVIDIA, but there are other manufacturers on the road map.
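
To make the interception idea a little more concrete, here is a toy sketch of API remoting in general, written in Python. It is not Bitfusion's implementation and it makes no real CUDA calls: `FakeGPUServer`, `RemoteGPU`, and `vector_add` are hypothetical names, and the "network" is just a JSON string handed from one object to another inside a single process.

```python
import json


class FakeGPUServer:
    """Stand-in for the machine that owns the accelerator (hypothetical)."""

    def vector_add(self, a, b):
        # A real server would launch a GPU kernel; this adds on the CPU.
        return [x + y for x, y in zip(a, b)]

    def handle(self, request: str) -> str:
        # Decode the request, run the named operation, encode the result.
        msg = json.loads(request)
        result = getattr(self, msg["op"])(*msg["args"])
        return json.dumps({"result": result})


class RemoteGPU:
    """Client-side proxy that intercepts calls and forwards them."""

    def __init__(self, server: FakeGPUServer):
        self._server = server

    def __getattr__(self, op):
        # Only reached for names the proxy does not define itself,
        # so every "API call" made by the application lands here.
        def forward(*args):
            request = json.dumps({"op": op, "args": list(args)})
            reply = self._server.handle(request)  # stands in for the network hop
            return json.loads(reply)["result"]

        return forward


gpu = RemoteGPU(FakeGPUServer())
print(gpu.vector_add([1, 2, 3], [10, 20, 30]))  # [11, 22, 33]
```

The application code calls `gpu.vector_add(...)` as if the device were local; the proxy is the only piece that knows the work happens elsewhere.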

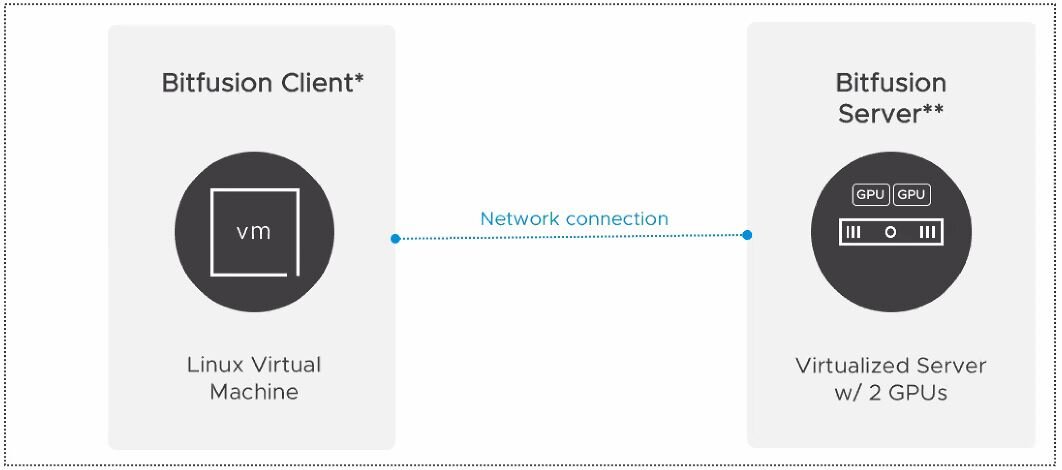

vSphere Bitfusion High Level Architecture

vSphere Bitfusion utilizes a client-server model. The client runs the workload and the server provides the hardware acceleration. The Bitfusion server will run on a virtualized platform and requires GPUs. The supported version for the Bitfusion Server is ESXi 7.0. The client can only run on a Linux-based virtual machine. This client can also be a container and works with any supported version of vSphere 6. and higher. There is no limit to the number of Bitfusion clients and servers that can exist in an environment. However, each client shares only from one server at a time. Ideally, there should be a maximum of 50 microseconds of latency between the client and the server.
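
As a rough illustration of the 50 microsecond guideline and the one-server-per-client rule, the Python sketch below picks a single server for a client from a set of latency measurements. The server names and sample values are made up, and `pick_server` is a hypothetical helper, not part of any VMware tooling.

```python
from statistics import median

MAX_LATENCY_US = 50.0  # guideline quoted above, in microseconds


def pick_server(latencies_us):
    """Choose one server whose worst sample stays within the latency budget.

    latencies_us maps a server name to a list of measured round-trip
    samples in microseconds. Returns (name, median latency) for the
    qualifying server with the lowest median, or None if none qualify.
    """
    candidates = {
        name: median(samples)
        for name, samples in latencies_us.items()
        if samples and max(samples) <= MAX_LATENCY_US
    }
    if not candidates:
        return None
    best = min(candidates, key=candidates.get)
    return best, candidates[best]


# Hypothetical measurements from one client to two servers.
measurements = {
    "gpu-server-a": [38.0, 42.0, 47.0],
    "gpu-server-b": [30.0, 35.0, 90.0],  # one spike breaks the budget
}
print(pick_server(measurements))  # ('gpu-server-a', 42.0)
```

Filtering on the worst sample rather than the average is a deliberately conservative choice for this sketch; a real environment may tolerate occasional spikes.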

The vSphere Bitfusion Server requires vSphere Enterprise Plus licensing. The client has no licensing requirements beyond your existing VMware licensing for the hosts. There are no other changes to the way GPUs are utilized in vSphere. VMware does warn that this does not apply to any future ASIC or FPGA support around Bitfusion. And as VMware has truly integrated Bitfusion into vSphere, management is performed directly in vCenter Server.

To learn more about VMware vSphere Bitfusion, check out the following links:

Bitfusion Acquisition Announcement

Storage Field Day 18, Here I Come!

For the second time, I'm lucky enough to be selected as a Tech Field Day delegate! This time, it's for Storage Field Day 18 in Silicon Valley February 27th through March 1st.

For those of you unfamiliar with Tech Field Day, it's an event run by Gestalt IT where independent tech influencers are brought together with vendors to review products and solutions with deep technical conversations. Each event has a particular technology focus, such as networking, cloud, wireless, and security.

Storage Field Day 18 will feature 7* different storage vendors. There's an asterisk as the anticipated number of vendors can grow (wink wink). Expect to see some traditional players like NetApp and Western Digital, and a few newcomers to the scene such as Cohesity, WekaIO, and StorPool. There's even a secret vendor on the list that we the delegates are not privy to yet.

SFD18 can be viewed live on TechFieldDay.com with sessions available a short while later on the Tech Field Day YouTube channel. You can also follow along on Twitter with hashtag #SFD18.

For more info about Storage Field Day, including a full list of delegates, check out the site here.

Troubleshoot & Assess the Health of VMware Environments with Free Tools - VMworld 2018

I had the opportunity to present my first VMworld breakout session at this year's conference in Las Vegas. Below are the videos of the demos provided during the session, as well as links to the tools discussed. Please do not hesitate to contact me for further information!

Session Description

Based on his highly popular VMUG session, Paul Woodward Jr. (@ExploreVM) will review some of the tools he's used in his career to assess the health and troubleshoot issues with VMware environments. Paul will provide demonstrations and real world examples on how these tools have helped him solve problems that plague every VMware admin. And the best part, these tools are free!

vCheck

Links

RVtools Website

Yellow Bricks - New Version Available

ESXTOP

Links

VMware - vRealize Log Insight

vRealize Log Insight - End of Availability

Other Session Links

vDocumentation

Vester

Veeam One Free Edition

Top 21 Must Have VMware Admin Tools

101 Free VMware Tools

Free VMware Tools

If you have suggestions for tools that should be added to the above list, do not hesitate to contact me via any of the channels provided below.

Do you have an idea or a topic for the blog? Would you like to be a guest on the ExploreVM podcast? If so, please contact me on Twitter, Email, or Facebook.

SuperMicro Build Day Live with vBrownBag

Recently, SuperMicro hosted Alastair Cooke and vBrownBag for another edition of Build Day Live. For those who don't know, "vBrownBag is a community of people who believe in helping other people." They run weekly podcasts and webinars, and also host live Tech Talks at conferences around the country. What makes the Build Day Live event different is that vBrownBag is on site at the vendor, building a production cluster throughout the event, from start to finish.

SuperMicro Does Networking?

Up until Build Day Live, I had no idea that SuperMicro was in the networking space. They offer a wide array of products from 1Gb to 100Gb in a 1U chassis. These switches are bare metal, and are compatible with the Cumulus Linux networking operating system. SuperMicro also has its own proprietary NOS for the 1Gb switches. Configuration can be completed via CLI or GUI, making management easy for admins at all skill levels.

JBOF Disaggregated Storage

Outside of the server hardware we all know, SuperMicro also has a deep selection of storage hardware. Of the two storage specific segments of SuperMicro Build Day, I was most interested in the JBOF/NVMe storage. During this segment, Alastair spoke to Mike Scriber. To quote Mike, "I design really, really cool storage systems using NVMe. Very high density systems." And when you look at what SuperMicro is up to, he's right.

Utilizing the Intel Ruler NVMe form factor, SuperMicro is quickly closing in on 1 petabyte of storage in a 1U rack chassis. The chassis has slots for 32 "rulers" that connect into 16 lanes of PCIe leading to 4 ports, which allows for 64gb/s of bandwidth. Another interesting feature of both the ruler form factor and the standard U.2 chassis is the engineering of the backplanes. The backplanes run parallel to the ruler form factor, and across the top of the U.2 drives. This design helps to keep densely packed 1U chassis cool, with few to no obstructions in the airflow.
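
A quick back-of-the-envelope calculation shows what that 1 petabyte target implies per drive. The Python sketch below assumes decimal units (1 PB = 1,000 TB) and that all 32 slots are populated with identical rulers; both are assumptions made for illustration.

```python
def capacity_per_ruler_tb(target_pb: float, slots: int) -> float:
    """Capacity each ruler needs, in TB, to reach target_pb across all slots."""
    tb_per_pb = 1000  # decimal units: 1 PB = 1,000 TB (assumption)
    return target_pb * tb_per_pb / slots


print(capacity_per_ruler_tb(1, 32))  # 31.25
```

In other words, hitting 1 PB in a single 1U chassis would take rulers of roughly 32 TB each.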

There were a lot of aspects to the SuperMicro Build Day Live event worth checking out, far more than I covered in this post! Check out the links below for all of the videos from the event.

Links:

SuperMicro Build Day - Condensed

vBrownBag YouTube SuperMicro Build Day Live Videos

The CTO Advisor - SuperMicro Build Day Interviews

Anthony Hook's SuperMicro Build Day Blog Post

vBrownBag.com - SuperMicro Build Day Live

vBrownBag on Twitter

SuperMicro's Web Site

If you'd like to continue the conversation about SuperMicro Build Day Live, do not hesitate to contact me via any of the channels provided below. Do you have an idea or a topic for the blog? Would you like to be a guest on the ExploreVM podcast? If so, please contact me on Twitter, Email, or Facebook.

Getting Started with StorMagic SvSAN - A Product Review

Recently, I had the opportunity to try out StorMagic SvSAN in my home lab to see how it stacks up. The following is an introduction to SvSAN, a description of the deployment, testing, testing results and my findings.

What is StorMagic SvSAN 6.2?

StorMagic SvSAN provides a hyperconverged solution designed with the remote office/branch office in mind. Two host nodes with onboard storage can be utilized in a shared-storage-style deployment in locations where a traditional three-tier architecture would prove difficult to manage or cost prohibitive. SvSAN is vendor agnostic, so it can be deployed onto existing infrastructure without the need to acquire additional hardware. The two storage nodes can scale out to support up to 64 compute-only nodes. Licensing is straightforward: one perpetual license per pair of clustered storage nodes. Initial pricing is also very accessible, starting at approximately $4,000 for the first 2TB license. Licensing and capacity can scale beyond the initial 2TB.

When asked about their typical customer base, StorMagic provided the following response: "StorMagic SvSAN is designed for large organizations with thousands of sites and companies running small data centers that require a highly available, two-server solution that is simple, cost-effective and flexible. Our typical customers have distributed IT operations in locations like retail stores, branch offices, factories, warehouses and even wind farms and oil rigs. It is also perfect for IoT projects that require a small IT footprint, and the uptime and performance necessary to process large amounts of data at the edge."

Technical Layout of SvSAN

A typical SvSAN deployment consists of the following base components: hypervisor integration, Virtual Storage Appliances (VSAs), and a Neutral Storage Host (NSH). In my lab environment, I used VMware vSphere, but StorMagic does offer support for Hyper-V as well. A plugin is loaded into the vCenter Server and provides the dashboard for management and deploying the VSAs. Following the wizard, a Virtual Storage Appliance is deployed on each host and the local storage is presented to the VSA. Before creating storage pools, the witness service (Neutral Storage Host) must be deployed external to the StorMagic cluster. The NSH can be deployed on a server, Windows PC, or Linux. It is lightweight enough that it can run on a Raspberry Pi.

SvSAN 6.2 introduced the ability to encrypt data. A key management server is required for encryption. For this evaluation, I installed Fornetix Key Orchestration as the KMS. Encryption options available include encryption of a new datastore, encryption of an existing datastore, re-keying a datastore, and decrypting the datastore. As I was curious as to what kind of performance hit encryption may have against the environment, I ran my tests against the non-encrypted datastore, then again after encrypting it.

Deployment and Testing

The overall installation process is fairly straightforward. StorMagic provides an Evaluation Guide which outlines the installation process, and their website has ample documentation for the product. I had to read through the documentation a couple of times to fully understand the nuances of the deployment. I did encounter a few hiccups during deployment: one IP issue, which I resolved, and a timeout on the VSA deployment. I did need to contact support to release the license for the Virtual Storage Appliance which timed out, but support was responsive and resolved my issue quickly. The timeout may have been tied to the IP issue, as the VSA deployed successfully on the second attempt.

With the underlying infrastructure in place, a shared datastore was deployed across both host nodes. Now the testing could begin. A Windows Server 2012 R2 virtual machine was deployed on the SvSAN datastore to run performance testing against. The provided Evaluation Guide gives many suggested tests to put the SvSAN environment through its paces. As I mentioned previously, I ran the tests against an encrypted datastore, a non-encrypted datastore, and a local datastore.

Following the guidelines set forth by the Evaluation Guide, Iometer was the tool of choice for performance benchmarking. Below is a chart of the metrics used. Outside of the suggested performance testing, I also ran various tests to see what the end user experience could feel like on an SvSAN-backed server. These tests included an RDP session into the VM, continuously pinging locations internal and external to the network, and running various applications.

The final tests run against the SvSAN cluster covered failure scenarios and how they would impact the virtual machine. Drives were removed, connectivity to the Neutral Storage Host was severed, and iSCSI and cluster networking were removed. An interesting aspect of the guide is that it gives you testing options to cause failures that will affect VMs running on the SvSAN datastore, so you can see first-hand how the systems will handle the loss of storage.

SvSAN Results & Final Thoughts

Performance testing run against the VM on the SvSAN datastore provided positive results. I was curious as to whether passing through an additional step in the process would affect IOPS, but there were only nominal differences between the local storage and the SvSAN datastore. I found the same to be true when it came to running an encrypted versus a non-encrypted datastore. IOPS performance held steady across all testing scenarios.

The same was true with the user experience testing. While running Iometer, Firefox, and a popular chat application, and pinging a website, the following failures were introduced with no impact:

hard drives were removed

a Virtual Storage Appliance was powered down

an ESXi host was shut down

Connectivity to the Neutral Storage host was severed
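
For anyone repeating this kind of test, one simple way to quantify "no impact" is to log a timestamped pass/fail result for each ping and then look for the longest run of failures. The Python sketch below does that; `longest_outage` is a hypothetical helper and the sample data is made up purely to show the calculation, not taken from my testing.

```python
def longest_outage(samples):
    """Return the longest stretch of failed probes, in seconds.

    samples is a time-ordered list of (timestamp_seconds, ok) tuples.
    An outage runs from the first failed probe to the next successful
    one (or to the last sample if the log ends while still failing).
    """
    longest = 0.0
    outage_start = None
    for timestamp, ok in samples:
        if not ok and outage_start is None:
            outage_start = timestamp  # outage begins
        elif ok and outage_start is not None:
            longest = max(longest, timestamp - outage_start)
            outage_start = None  # outage ends
    if outage_start is not None:
        longest = max(longest, samples[-1][0] - outage_start)
    return longest


# Made-up log: one probe per second, two probes lost in the middle.
log = [(0.0, True), (1.0, True), (2.0, False), (3.0, False), (4.0, True), (5.0, True)]
print(longest_outage(log))  # 2.0
```

A result of 0.0 across every failure scenario would match the "no impact" outcome described above.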

I was impressed with my experience with StorMagic's SvSAN. I went from no prior exposure to running production-ready datastores in approximately an hour. The solution performed well under duress. Overall, StorMagic SvSAN is an excellent choice for those in need of a solid remote office/branch office solution that is reliable and cost effective.

Lab Technology Specifications:

Two Dell R710s

24 GB RAM each

2x X5570 Xeon 2.93 GHz 8M Cache, Turbo, HT, 1333MHz CPU Each

One 240 GB SSD drive for caching in each host

Presented as a single 240 GB pool from the RAID controller

5 x 600 GB 10k SAS drives configured in RAID 5

Presented as two pools; 400GB & 1.8 TB

VMware vCenter Server Appliance 6.5

VMware ESXi 6.5 U2 Dell Custom ISO

Cisco Meraki MS220 1GB Switching
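
As a sanity check on the storage layout above, RAID 5 gives up one drive's worth of capacity to parity. The short Python sketch below works through the numbers, assuming 600 GB drives; the conversion to binary units is included only as one possible explanation for the difference between the raw figure and the presented pool sizes.

```python
def raid5_usable_gb(drive_count: int, drive_size_gb: float) -> float:
    """Usable RAID 5 capacity: one drive's worth of space goes to parity."""
    if drive_count < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (drive_count - 1) * drive_size_gb


usable_gb = raid5_usable_gb(5, 600)      # assumes 600 GB drives
usable_gib = usable_gb * 10**9 / 2**30   # same capacity in binary gigabytes

print(usable_gb)          # 2400
print(round(usable_gib))  # 2235
```

The two presented pools (400GB and 1.8 TB) add up to about 2.2 TB, which is in the same range as the binary figure.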

Further reading on StorMagic:

SvSAN Lets You Go Sans SAN

This blog was originally published at Gestalt IT as a guest blog post.

If you'd like to continue the conversation about StorMagic SvSAN, do not hesitate to contact me via any of the channels provided below. Do you have an idea or a topic for the blog? Would you like to be a guest on the ExploreVM podcast? If so, please contact me on Twitter, Email, or Facebook.